Disaster recovery

Total disaster recovery

This procedure targets a global TPE cluster recovery in the following scenarios:

- Loss of all TPE nodes (or one node in standalone mode).

- Docker swarm cluster quorum loss when more than one node failed.

Before starting the recovery procedure, make sure to have a valid TPE Backup archive to be able to restore your data.

-

Install the Operating System on the needed server(s).

-

Redo the ThingPark Enterprise Cluster creation

WARNINGA limitation in the restore process causes the cluster configuration to be restored but not applied. Make sure to configure the new cluster like the old one.

You can recover the saved configuration with the following command:

tar -axf config.archive.tgz ./infra.yml -O -

Redo the Configuration and Deployment.

-

Connect to Cockpit.

-

Go to the TPE Backup module.

-

Click on Restore.

-

In "Restore source path", set the path where is mounted your TPE Backup archive

-

Click on Next.

-

Select the Backup you want to restore and click on Next.

-

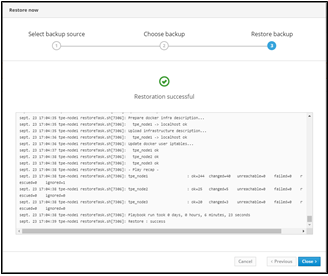

Wait until restoration is done.

Here is the expected final output:

-

Click on Close.

-

Go to the TPE Configuration Cockpit module, click Save & Apply to apply the configuration restored.

-

To finish, go to the TPE Services Cockpit module and check that all services are running and the TPE node(s) are ready.

High Availability node recovery

This procedure targets the recovery of one node of a ThingPark Enterprise HA cluster (hardware failure).

-

Install the Operating System.

-

Connect to one of the running TPE nodes. Execute an ssh command to connect to the server:

ssh support@${IP_OR_HOSTNAME_OF_TPE} -p 2222 -

Remove the node lost from Docker Swarm (old node reference) by running the 2 following commands:

docker node demote <node_name>

docker node rm <node_name>Where

<node_name>must be replaced by "tpe-node1", "tpe-node2" or "tpe-node3" following the node lost. -

Do the TPE Cluster Discover by running the following script:

tpe-cluster-discover -i -c '{"hosts": [ {"ip": "<IP address node1>", "hostname":"tpe-node1", "sup_pass": "<support_password>" }, {"ip": "<IP address node2>", "hostname":"tpe-node2", "sup_pass": "<support_password>" }, {"ip": "<IP address node3>", "hostname":"tpe-node3", "sup_pass": "<support_password>" }]}'Where:

<IP address node>must be replaced by the IP address of each node.<support_password>must be replaced by the support user password.

-

Connect to Cockpit (via a running node, not the node under re-installation).

-

Go to the TPE Services module, under TPE cluster operations click on Redeploy cluster:

-

You are prompted to confirm the cluster redeploy:

-

Click on Confirm.

-

Once the Cluster redeploy is done, go to the TPE Configuration module.

-

Click on Save & Apply.

-

To finish, go to the TPE Services module and check that all services are running and the three nodes are ready state.